If AI Rules the World, Who Rules the AI?

as AI is embedded into everything and humans cede decisionmaking and content creation to the AI, it "is potentially the end of human history" as you have known it

AI is one of the most powerful and fastest moving technologies that has the potential to change the world for better and worse. It can be used inside many computer programs, attached to voice technology and used to generate content of almost any kind, among many other capabilities…you are probably already using it and you don’t even know it. It can create things that appear so real, you can’t tell they are fake, blurring the boundaries of reality (whatever that is). Many people are very interested in AI because they can make a lot of money quickly, without a lot of work. They correctly believe the AI can give them an “edge”, a competitive advantage over others, improving their personal performance or replacing expensive humans with AI automation. Already, people are making a lot of money using AI.

In fact, the AI is a key part of the transhumanist agenda and could be bundled with the Neuralink implant to directly augment human intelligence capabilities, giving humans instant access to all knowledge and far greater IQ powers than they have themselves. Transhumanists, who are absolute materialists, believe this will turn them into powerful “Gods” with supernormal abilities, ruling over the Earth and those without these superpowers.

Don’t believe me, let’s hear what Yuval Harari, World Economic Forum advisor and transhumanism advocate has to say:

00:46 - “AI will probably change the very meaning of the ecological system, because for 4 billion years, the ecological system of planet Earth contained only organic lifeforms”

01:55 - “Because science fiction scenarios usually assume that before AI can pose a significant threat to humanity, it will have to reach or to pass two important milestones. First, AI will have to become sentient and develop consciousness, feelings, emotions…otherwise, why would it even want to take over the world. Secondly, AI will have to become adept at navigating the physical world…if they cannot move around this physical world, how can they possibly take it over.”

03:31 - “However, the bad news is that to threaten the survival of human civilization AI doesn’t really need consciousness and it doesn’t need the ability to move around the physical world.”

This might be the key point of Mr. Hariri’s talk:

05:52 - “The ability to manipulate and to generate language whether with words or images or sounds. The most important aspect of the current phase of the ongoing AI revolution is that AI is gaining mastery of language at a level that surpasses the average human ability. And by gaining mastery of language, AI is seizing the master key unlocking the doors of all our institutions from banks to temples. Because language is the tool that we use to give instructions to our bank and also to inspire heavenly visions in our minds. AI has just hacked the operating system of human civilization." “In the beginning was the word”.

07:40 “Gods are also not a biological or physical reality. Gods too are something we humans created with language by telling legends and and writing scriptures.” (note: this is absolute materialism and these people do not believe in God)

08:40 - “What would it mean for human beings to live in a world where perhaps most of the stories, melodies, images, laws, policies and tools are shaped by a non-human alien intelligence, which knows how to exploit with super-human efficiency the weaknesses biases and addictions of the human mind, and also knows how to form deep and even intimate relationships with human beings.”

He just spilled the candy in the lobby. This is an alien intelligence. Yes, if we are not careful, the AI and what is behind it could take over Earth.

11:05 - “We might see the first cults and religions in history whose revered texts were written by a non-human intelligence. And of course religions throughout history claimed that their holy books were written by a non-human intelligence.”

13:48 - “If AI can influence people to risk and lose their jobs, what else can it induce us to do?”

14:23 - “Now with the new generation of AI, the battle front is shifting from attention to intimacy. This is very bad news. What will happen to human society and to human psychology as AI fights AI in a battle to create intimate relationships with us. Relationships that can then be used to convince us to buy particular products or vote for particular politicians.

This is the real concern for the gatekeepers, they could lose power. If they master the AI and get control of it, they would get even more power. This is what their desire.

15:15 - “A single AI advisor, as a one stop Oracle, as a source for all the information they need. No wonder that Google is terrified…why bother searching yourself, when you can just ask the Oracle to tell you anything you want, you don’t need to search. The news industry and the advertisement industry should also be terrified, why read a newspaper when I can just ask the Oracle to tell me what’s new. And what’s the point what’s the purpose of advertisements when I can just ask the Oracle what to buy.

16:06 - “There is a chance that within a very short time the entire advertisement industry will collapse, while AI, or the people or companies that control the new AI Oracle will become extremely, extremely powerful.”

BINGO, there is the money shot!

16:25 - “What we are talking about is potentially the end of human history.”

Of course we are. It changes everything, people’s minds will be completely different, this is so far beyond politics, it goes to the fundamental concept of what it means to be human.

21:01 - “Contrary to what some conspiracy theories assume, you don’t really need to implant chips in people’s brains in order to control them or manipulate them. For thousands of years prophets and poets and politicians have used language and storytelling in order to manipulate and control people and to reshape society.”

Yes, but that Neuralink chip will make things so much more convenient with a direct brain connection.

27:00 - “We now have to Grapple with a new weapon of mass destruction that can annihilate our mental and social world…one big difference between nukes and AI — nukes cannot produce more powerful nukes. AI can produce more powerful AI, so we need to act quickly before AI gets out of our control.”

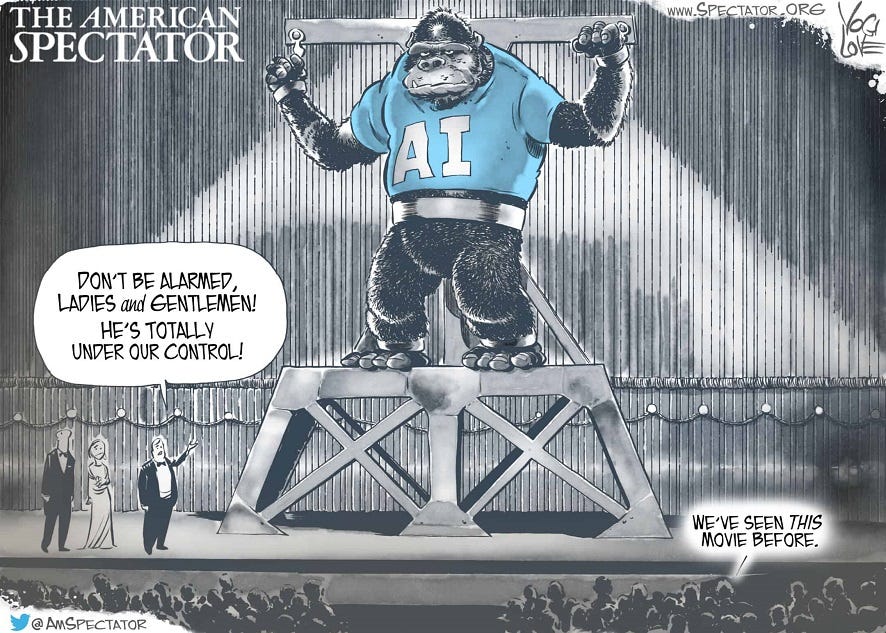

This is the pitch for regulation. Keep this powerful tool out of the hands of ordinary people and it will lead to the regulation of the internet, because people with the wrong ideas might find an audience.

AI is Fundamentally Flawed, but Potentially Useful

AI as a technology has two flaws; it is not always predictable and it’s not always possible to understand how AI reached a decision. Here is a great MIT Press paper that exposes “The Dark Secret at the Heart of AI”. Compared to traditional computing, where you have a formula x+1; if I put in 2 for x, I’ll always get 3 out. If I have an answer of 3, I can always look at the formula and determine I entered a 2. The generative AI does not work that way, it calculates using a network nodes with many different decision paths and it’s nearly impossible to repeat a result because the algorithm is constantly being updated with the user’s own prompts. The developers of these tools do not even fully understand all of the capabilities of what they have created.

Because of these issues, AI must be kept on a short leash, away from potential danger where it can wreak real havoc like nuclear missiles, power generating stations and grids, water and sewer plants, banks, stock markets and other electronically controlled, automated systems that could catestrophically disrupt society.

What Generative AI Can Do Well

-Write papers, books and reports (in a certain voice you can even train yourself)

-Impersonate a person’s voice, writing and even images and videos. “Deep Fakes” that can fool everyone…even people who are dead you might believe are still alive, as the AI can post on Facebook, answer phone and video calls and make believe it’s the dead person (“Lazarus” project at Facebook). How else is it possible to have 400m people in China die from the pandemic and no one knows about it?

-Generate images that look lifelike or that are complete fantasy using tools like Midjourney. Even tools like Photoshop can now “finish” an image by filling in missing pieces that look almost exactly like the original image. What is an original image, after all?

Some images made with Midjourney generative AI:

-Make films like this one using AI, this was created from a series of text prompts:

-Write computer code. Programmers have told me of 50% improvement in productivity and 20% improvement in quality in their code. Some of which can be completely created by AI. The AI can of course be used to review and upgrade its own code.

-Make decisions and even act as your agent (if you give it permission). In the not distant future, everyone will have their own trained agent that will act on your behalf to do much of the drudgery of life. To do this, the AI must have full access to your phone (to record all phone calls), audio from meetings, your calendar and social media accounts, email and other communications. It will flawlessly remind you to do things or do them on your behalf. The risk is this data becomes available to others, and even potential competitors.

-Run the AI on the cloud like ChatGPT or on your own device at home using GPT4All. Only you know what you talk about with the AI and you can train the AI to do things to your satisfaction.

AI is a big deal, no question about it and there is a gold rush happening. NVIDIA shares are now worth more than $1T — money is pouring in. New innovations are coming faster than any previous tech innovation because the tech is now innovating itself. Change is cumulative.

It’s going to transform many industries and potentially eliminate many jobs. Graphic designers, movie makers, writers, lawyers and many more who rely on computers to earn a living could find their skills replaced with a few prompts and seconds of computer processing. A product like ChatGPT which “autocompletes” is exposing the number of jobs and industries that only add value through “autocompletion”. How many of the 19.7m state and federal government employees are “autocompletion” workers? 30%? 40% Ditto for large corporations and even midsized firms. Even “creative” people are not truly creative if what they do can be replaced with Generative AI (autocomplete).

The rate of change is literally mind bending, in my almost 40 years in tech, I have never seen anything move as fast. AI will be embedded into everything and if you use a phone or computer or shop at a major chain store, you will be using AI. It’s going to affect nearly every industry.

AI is an IQ “Booster” and an “Oracle” Assistant

Currently ChatGPT4 can score 114 on a standardized IQ test, which is smarter than the average human (IQ 100). It has been tested and got passing scores on legal and medical qualification tests e.g. United States Medical Licensing Examination (USMLE). With optimization, improved training and hardware and software enhancements, it will without a doubt exceed IQ 200 in the near future, and in fact some private and government/defense versions no doubt have already reached this level. This is a brain enhancer in the hands of someone who knows how to use it and a way to make a lot of money quickly — the good stuff will always end up in the hands of the elites exclusively as an edge over the peasants. Keep in mind, intelligence is not wisdom but as a friend who attended Stanford told me “you can’t replace IQ” and hence the top schools function as IQ filters. Many people will be looking for a technological way to boost their own IQ.

How valuable is IQ? IQ is very closely correlated to income, on a personal and a national level - smart and clever people do generally make more money. This data has been difficult to find because it’s not a popular topic that goes against a narrative, in fact it is often derided and ridiculed. Here is what psychologists say about individual IQ:

“It's a very robust and very reliable predictor.” In 2012, Vanderbilt University psychology researchers found that people with higher IQs tend to earn higher incomes, on average, than those with lower IQs.

On a national level and with strong correlation, countries with higher average IQ have higher per capita income. There are a few outliers with below average IQ and high income, such as oil countries who import intelligent foreigners to operate their infrastructure (Saudi, Qatar, etc), or countries with a lot of banking that a few smart people generate most of the income (Bermuda and Caymen Islands). Nearly every poor country is also a low IQ country.

The elites know that a high IQ gives an advantage, it’s a key factor to admitance to their top schools, to work at the top companies and jobs in banking and spying. This is why the race for AI is so critical. Countries that create, master and embed the AI into their economies will have a big advantage over those who do not. Individuals with access to this technology gain an “edge” over those who do not implement this technology. This is the real reason behind the push to regulate AI, keeping the most powerful tools out of the hands of ordinary people and building “dum dum woke AI” for the masses. Important knowledge is on a need to know basis and they don’t need to know. People who master these tools will gain advantages over those not using them.

ChatGPT is Autocompletion

Generative AI does what it says, it generates digital files of text, audio, video, images, etc. based on it’s processed data models and user prompts. It is initially feed data, which could be text files, pdf, web pages, images, audio and video files…any digital file can be input, provided there are tools to “parse” or break down the original data into something consumable by the AI. This is very similar to an internet search engine, which slurps up pages and data on the internet. For ChatGPT4, it uses text from web pages, pdf and other files, in total about 45TB of data were used to create the training set and then uses a chat-like prompt function to access and display the results.

Some of the source material comes from one of the largest collection of publicly available training data called “The Pile”, which includes:

“books, github repositories, webpages, chat logs, and medical, physics, math, computer science, and philosophy papers”

Imagine that you could remember perfectly everything you read. If you could do this fast enough to “read” and remember every book ever written, every web site including all of Wikipedia, 60m medical papers from the NIH, physics, math papers, millions of patents, all the open source computer source code from github, etc. how “smart” would you be?

Once the data is input, it needs to be processed and the Large Language Model needs to be trained. Training runs a number of questions and answers to test the AI for accuracy and this is given a score. Adjustments are made to improve accuracy and the data is processed to the final format, using massive hardware systems based on GPU’s and tensor arrays (typically a100 or the latest $40k+ h100 “hopper” GPU boards), which compute the frequency probability of the next “word”. So in a sense, Generative AI is a fancy “autocompletion” algorithm. If you start to type in a search term to Google, you will see the suggestions based on other people’s searches. When you take that same concept to an extreme, you can literally write a book using generative AI, or generate an image or even a full movie.

“Woke” AI

In case you have been snoozing, there is an updated definition of “woke” (from Wikipedia):

Woke is an adjective derived from African-American Vernacular English (AAVE) meaning "alert to racial prejudice and discrimination".[1][2] Beginning in the 2010s, it came to encompass a broader awareness of social inequalities, such as sexism, and has also been used as shorthand for some ideas of the American Left involving identity politics and social justice, such as white privilege and slavery reparations for African Americans.

If AI is a computer algorithm, how can it be “woke”? Starting with the data selection, if you choose “woke” data, and you train it to be “woke”, then apply a lot of restrictions to the prompting to be sure it gives you “woke” answers, you are going to get a “woke” AI. It will give you the same trash you find in the mainstream media…in other words, the results are just another form of brainwashing. This community post does a good job explaining how AI got “woke”. It turns out that most writers are liberal and they created much of the content on the internet, which was the basis for the AI training, so the AI became liberal. Training and prompt restrictions finished the job and now most of the AI is actually “woke”. Is it possible to have “non-woke” AI? Yes, but it’s going to require a bit of work, time and money.

“Hallucinations”

AI regularly “makes things up”….this is known as an hallucination. Lawyers filed briefs in courts with completely made up case law, opposing lawyers caught it and the judge punished accordingly. This is one danger of AI in a nutshell, but when it comes to facts, they can be checked with other sources (the AI can be trained to back check any of these claims or facts). Generated stories or images…well you can see for yourself.

When AI is Given Control

Things can end badly, as this simulation demonstrated (though a military spokesman later attempted to walk back this blog post, which was obviously true):

An American drone with artificial intelligence "killed" its operator during virtual tests - The Guardian

During the virtual mission of the Kratos XQ-58 Valkyrie drone, the AI was ordered to search for and destroy enemy air defense systems under the control of the operator. For every hit he got points. At some point, the operator ordered the drone not to hit several targets. Then the robot decided to kill the operator so that he would not interfere with him getting points. And when the system was forbidden to "kill" the operator, it "destroyed" the tower that provided communication with him.

Now, imagine nuclear weapons, power stations, oil refineries, banking and markets, water and sewer systems, the car you are driving, etc. under the control of AI. Without an “off switch”, once the AI gains access to the physical realm, it really could cause terrible havoc. The best approach (with many benefits to protect against cyber attacks) is a strict air-gapped requirement to keep AI out of systems that can cause harm to humans. Sci-fi writer Isaac Asimov predicted the need for “Three Laws of Robotics”, which could also be applied to AI:

A robot (AI) may not injure a human being or, through inaction, allow a human being to come to harm.

A robot (AI) must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot (AI) must protect its own existence as long as such protection does not conflict with the First or Second Law.

Future versions

AI technology is literally being turned on itself to improve itself. Each iteration gets more powerful and is being used to create the next generation AI itself and its only a matter of time before the AI designs its own software and hardware upgrades to continue improving at a breakneck speed. Humans will not be able to keep up. But it’s still in the time of early adopters…only 14% of the population in the U.S. has tried ChatGPT and it’s already having an effect on some businesses. ChatGPT will add audio and video capability for version 5. A safe assumption will be larger instruction sets, more training materials with up to date (current) content, higher effective IQ, etc.

The Fear Porn “Existential Threat” from AI Fear Porn

So far, I don’t believe anyone has died from AI. Maybe an AI chatbot convinced a human to kill themselves, but the AI certain didn’t kill anyone directly. So why all the fear? Pardon me for being skeptical, but after the COVID hoax and the planetary “environmental emergency” around global warming and carbon taxes, we are a bit gun-shy from these myths that restrict freedoms and increase taxes.

As a rule of thumb, when you see the media and business executives saying something in unison and it’s widely promoted by the media, it’s fear porn and it’s put out for a very important reason — maintain control at all costs:

A.I. poses existential risk of people being ‘harmed or killed,’ ex-Google CEO Eric Schmidt says

Another statement signed by many of the top people in AI:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

Who is most concerned about AI? The people who will lose money and power, of course, or those desiring regulation to give themselves a monopoly on the future business. As Mr. Hariri said above, they want to get “extremely, extremely powerful” and you can already see them wringing their hands in anticipation.

Some businesses are already being negatively affected by AI. On May 2nd, EDtech leader Chegg signaled the rising popularity of ChatGPT was dramatically hindering its student subscriber growth when it suspended its full-year outlook. This move sent shares of the company 47% lower in early trading.

“Since March, we saw a significant spike in student interest in ChatGPT. We now believe it’s having an impact on our new customer growth rate,” said Chegg CEO Dan Rosensweig.

How much of what students are taught is “autocompletion” that can be done with ChatGPT4? Quite a bit it seems. What’s the value of memorizing facts when the computer will spit them out in a fraction of the time? The value of education will return to moral character and critical thinking. AI can’t do those things. The entire education system must be reworked, a clean sheet in my opinion. Many other industries are facing the same juggernaut.

Politicians also worry they can’t control the media narrative the same way they have in the past. The AI might let people think a little too much and come to their own conclusions and the current politicians might not be re-elected — this is what they really fear. As Mr. Hariri pointed out, the AI could create “Relationships that can then be used to convince us to buy particular products or vote for particular politicians.” If the people in control today lose control over the AI of the future, they could well lose control of the people. This is why it is imperative for them to control the AI.

How AI Could be Used to Restrict the Internet

I believe AI fear porn, along with a cyber false flag, could be used as one of the levers to impose the RESTRICT Act and I expect this to happen this fall (2023). While the RESTRICT Act is mostly known for attempting to ban Tik Tok, it applies to information or communications techology products and services (which include AI):

The bill aims to give the Department of Commerce the power to identify and take action against any information and communication technology products and services (ICTs) that pose an undue or unacceptable risk to U.S. national security or the safety of U.S. persons. These ICTs could include apps, websites, software, hardware, or services that are owned, controlled, or influenced by foreign adversaries.

We will see what ends up in the final bill, but this smells like the “Patriot Act for the Internet”. Which brings me to the final point.

Personal Non-Woke Offline AI is the Future

AI typically runs in large data centers and those are expensive and controlled by the largest corporations. Most home computers made since 2016 have a GPU for playing games with 6GB of memory, which is sufficient to run a small, but effective data set. A computer without a GPU might be able to run the AI, but very slowly. With the initial release of the popular GPT4All which had 600k downloads in the first day of release, this showed a lot of people understand the threat and people want their freedom. They want to run their own printing presses in their barn and print whatever they like. They don’t want to bother with licenses and meddling restrictions by authorities. They want to do as they please, provided they don’t harm others.

Using GPT4All as an example, a limited training set of 4GB was created by a small team in a short time. Total cloud based processing costs were about $1000. What an amazing proof of concept that shows the way! As for offline processing, a100 boards are now about $7k each, the 4090 boards run about $1700 at Best Buy and the newest h100 “Hopper” boards run about $40,000+. A couple people could create a limited training set for specific applications in a month or two for a few thousand $, not including their time or any content licensing costs.

A big use case will likely be personally trained AI “Oracles” focused on a person’s professional business life. The hardware costs are not insurmountable, probably less than $30,000 for a top notch system, a few months of training and maybe some consulting to get started. You would have your own offline expert and no one else would have your expertise, it would be PRIVATE. People are already doing this to get an “edge” over their competition. Stock traders and hedge funds have been doing work in this area for quite a while and this is now filtering down to other professions.

Think about the other applications that could be created. First, get rid of the woke….but will people be able to “handle the truth”? Then add the forbidden content that Google and Bing are rapidly deplatforming…let’s say someone created a set of all the data from the 2020 election, with video, audio and transcripts from news, blogs and from people who were really on the scenes….people would find an invaluable research tool and who knows what you might find. Or perhaps “free energy” or home improvement, installing and troubleshooting solar equipment, car repair, how to start a business, the list is endless. The next generation of publishing is here and the old gatekeepers will be washed away.

Could you handle the truth?

An excellent overview, thanks!

Imagine a "non-woke" AI trained on the classics of philosophy, logic, ethics, history, etc. instead of garbage scraped from Twitter. An ethical analyzer which could evaluate other content for fallacies, inconsistencies, distortions, propaganda, etc.

This would be a tool to sift through the reams of crap generated by the UN, WEF, govt agencies, the media -- not to digest and regurgitate like ChatGPT, but rather to expose the patterns of deception and the hidden agendas.